After having worked on this for about 8 years, I am very glad that our paper Foundations of Structural Causal Models with Cycles and Latent Variables has finally been published! Behind a paywall at projecteuclid.org/journals/annal… and openly accessible at arxiv.org/abs/1611.06221

Here is an identity due to Euler. It does not look like much at first, but if you stare long enough, you notice two mysterious things about it: 1. All coefficients are ±1. 2. The RHS is very sparse: only O(√n) terms have degree at most n. Why does this happen (and why should we care)? (1/) pic.twitter.com/nqmY7hXYwJ
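
The identity itself is only in the attached image, so the following is a sketch under an assumption: that it is Euler's pentagonal number theorem, ∏(1 − x^k) = Σ (−1)^m x^(m(3m−1)/2), which matches both properties described. The function names are illustrative. The code expands the product up to a chosen degree and compares the result with the signed generalized pentagonal exponents.

```python
def euler_product_coeffs(n):
    """Coefficients of prod_{k>=1} (1 - x^k), truncated to degree n.

    Factors with k > n cannot affect degrees <= n, so k = 1..n suffices.
    """
    coeffs = [0] * (n + 1)
    coeffs[0] = 1
    for k in range(1, n + 1):
        # Multiply by (1 - x^k) in place; iterate downward so that
        # coeffs[d - k] still holds the value from before this factor.
        for d in range(n, k - 1, -1):
            coeffs[d] -= coeffs[d - k]
    return coeffs


def generalized_pentagonal(n):
    """Map each exponent m(3m-1)/2 <= n (m any integer) to its sign (-1)^m."""
    signs = {}
    m = 0
    while m * (3 * m - 1) // 2 <= n:
        for j in (m, -m):
            e = j * (3 * j - 1) // 2
            if e <= n:
                signs[e] = (-1) ** abs(j)
        m += 1
    return signs


if __name__ == "__main__":
    n = 100
    coeffs = euler_product_coeffs(n)
    nonzero = {d: c for d, c in enumerate(coeffs) if c != 0}
    # Assumed identity: nonzero terms sit exactly at generalized pentagonal
    # exponents, each with coefficient +1 or -1.
    print(nonzero == generalized_pentagonal(n))
    print(sorted(nonzero))
```

Under that assumption, the sparsity claim follows because the exponents grow quadratically in m, so only about √n of them fit below n.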

I just published the cleaned-up official #CVPR2022 LaTeX template on @overleaf. May it save you some precious time :) Best of luck with all your submissions! 🍀 overleaf.com/latex/template…

How to network at a virtual conference?🕸️ Excited to attend your first (virtual) conference? How do we meet new people and expand our professional network? Some ideas that I found useful 👇👇👇

In case it's helpful: Resources on learning causality "from scratch", assuming prior math background. (we used this for a reading-group last year; I highly recommend): First, start with this short lecture for the philosophical motivations: bayes.cs.ucla.edu/BOOK-2K/causal… Then 1/

If you are a non-native English (NNE) speaker and writing introductions for papers is hard for you, this thread is for you. 1/10 pic.twitter.com/WF28UA0JsX

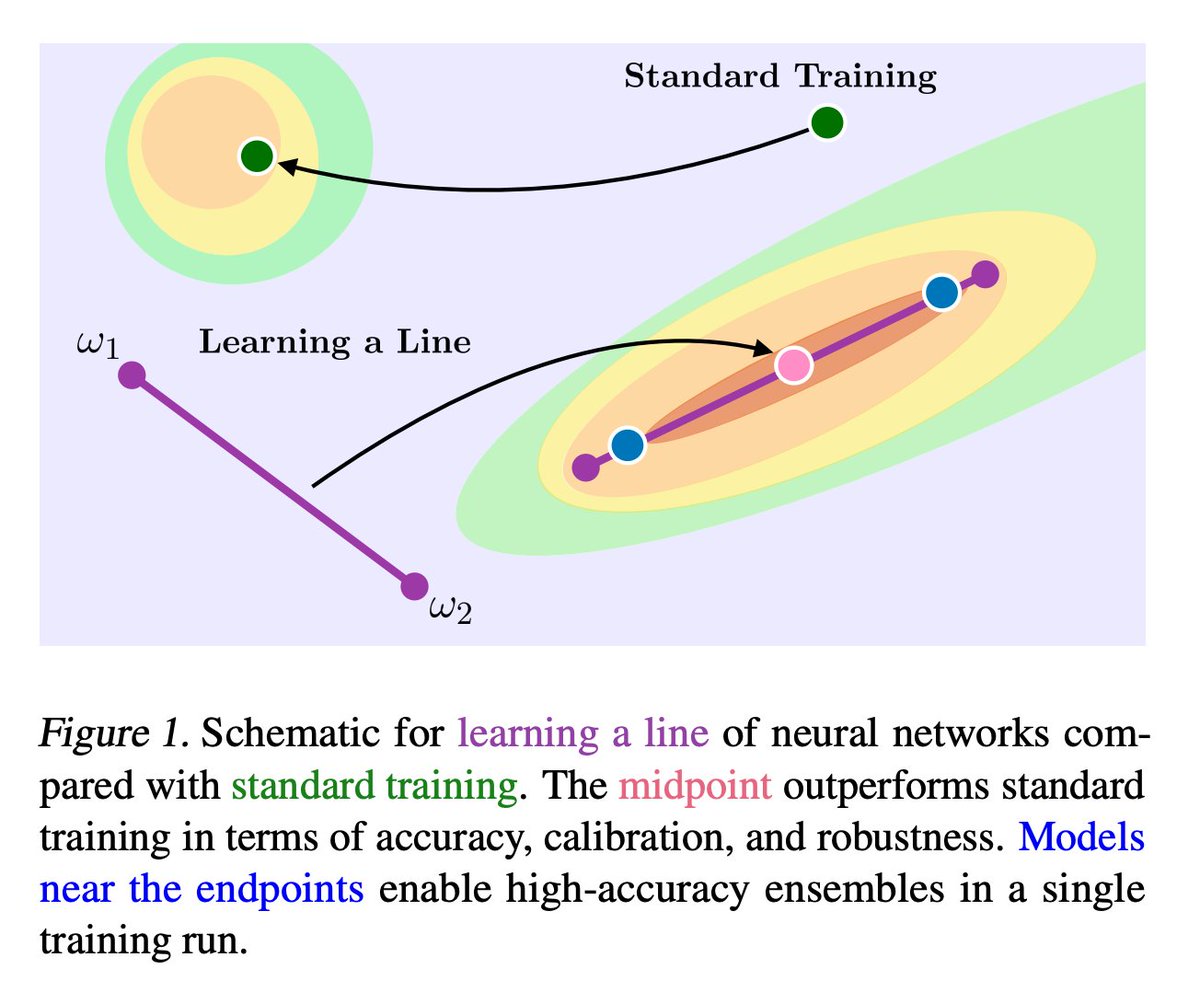

Sharing some takeaways from Learning Neural Network Subspaces, a fun project we learned a lot from and hopefully you can too. (1/n) tldr: We train lines, curves, and simplexes of neural networks from scratch arxiv: arxiv.org/abs/2102.10472 code: github.com/apple/learning… #ICML2021 pic.twitter.com/wj76CeU6d8

Some great lectures on AI and ML from Oxford University. A thread... [1/32] @CompSciOxford @UniofOxford

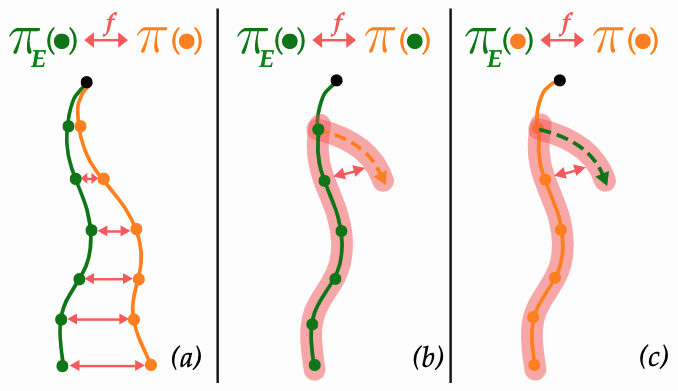

Ridiculously excited to share our #ICML2021 paper, "Of Moments and Matching: A Game Theoretic Framework for Closing the Imitation Gap", (w/ @zstevenwu , Sanjiban Choudhury and Drew Bagnell). We provide a unifying framework for imitation learning via the lens of game theory. [1/N] pic.twitter.com/z1hvtX0Dfv

It is widely believed that adversarial training hurts accuracy. Actually, accuracy goes up when datasets are properly augmented with adversarial examples. "Adversarial data augmentation" is model agnostic, and uses adversarial training to get SOTA on ImageNet, graphs, NLP, etc.

YouTube channels that'll make you smarter: Vsauce, SciShow, Big Think, CGP Grey, Tom Scott, Veritasium, Polymatter, Qualiasoup, Kurzgesagt, Mark Rober, Numberphile, Crash Course, ASAP Science, Minute Physics, PBS Space Time, Smarter Everyday. Reply with more channels.